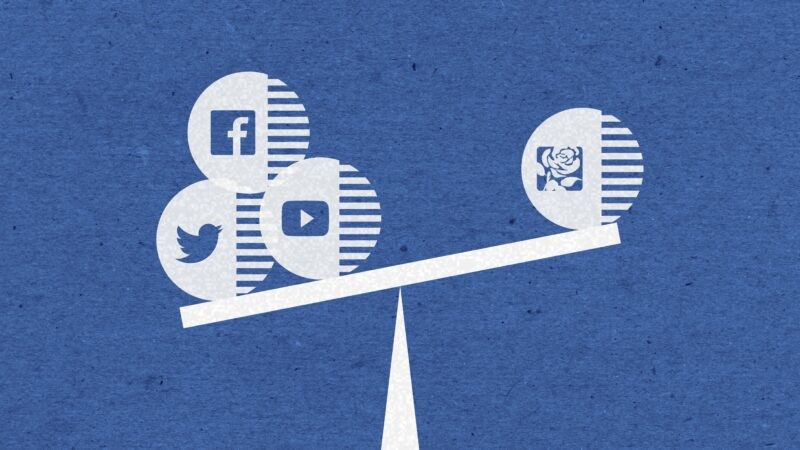

How should we regulate online extremism?

Author: Sir Ivor Roberts

A month after the horrific attack in Christchurch, which was live-streamed on Facebook, New Zealand Prime Minister Jacinda Ardern said: “It’s critical that technology platforms like Facebook are not perverted as a tool for terrorism, and instead become part of a global solution to countering extremism.” We wholeheartedly agree.

Neo-Nazi and other far-right material, alongside Islamist and far-left content, spreads swiftly on Facebook, with the potential to reach thousands in a matter of hours. Facebook – the third most visited website on the Internet, and the largest social media network in the world – is not alone. Social media platforms have been used by extremists to radicalise others and inspire acts of terrorism across the world. Recent examples from the UK include Darren Osborne, who, after being radicalised online, drove his van into a crowd of worshippers near the Finsbury Park Mosque.

Salman Abedi, who committed the terror attack at the Manchester Arena in May 2017, had been radicalised watching ISIS videos on Twitter. Roshonara Choudhry, who in 2010 attempted to kill Labour MP Stephen Timms, had been radicalised by Al-Qaeda recruiter Anwar al-Awlaki, whose lectures she found online. Exposure to online extremism is not the sole cause of radicalisation, but in combination with other risk factors, it can weaponise a latent disposition towards terrorist violence.

Preventing online extremism has become a priority for policy-makers in Europe. Germany was the first to legislate against extremist content in 2018, with its NetzDG Law, and earlier this year the EU put forward a proposal for a regulation on preventing the dissemination of terrorist content online. In the UK, the Home Office and DCMS have proposed to regulate internet platforms in the Online Harms White Paper, which considers a much wider range of harms than extremism and terrorism, such as bullying, child sexual exploitation and gang-related content.

Given the stakes described above, a crucial design feature of any online counter-extremism technology is the capacity to identify extremist content quickly enough to keep pace with the vast amount of data uploaded to the internet. A clear definition of extremist content can prevent uncertainty and over-blocking, and help ensure content is judged consistently by human moderators, rather than leaving it to individual social media platforms to determine what is acceptable when it comes to terrorist content.

Once human moderators have determined something is extremist content, platforms should use hashing technology to screen out known extremist content at the point of upload. One example of such technology is the Counter Extremism Project’s eGlyph – a tool developed by Hany Farid, a Professor of Computer Science at the University of California, Berkeley and member of the CEP’s advisory board. eGlyph is based on ‘robust hashing’ technology, capable of swiftly comparing uploaded content to a database of known extremist images, videos, and audio files, thereby disrupting the spread of such content. We have made this ground-breaking technology available at no cost to organisations wishing to combat online violent extremism.
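To make the idea of robust hashing concrete, here is a minimal sketch in Python. It is not eGlyph's algorithm, whose internals are not described in this article. It uses a generic "average hash" as a stand-in, and every name in it (`average_hash`, `hamming_distance`, `is_known`, the 8×8 grid, the distance threshold of 5) is an assumption chosen for illustration. The point it shows is that a slightly altered copy of a flagged image produces a hash close to the original's, so it can be caught at upload, while an unrelated image does not.

```python
from typing import Iterable, Sequence


def average_hash(pixels: Sequence[Sequence[int]], size: int = 8) -> int:
    """Illustrative robust hash of a grayscale image (rows of 0-255 values).

    The image is reduced to a size x size grid by block averaging, and each
    grid cell contributes one bit: 1 if it is brighter than the grid mean.
    Assumes the image is at least `size` pixels in each dimension.
    """
    height, width = len(pixels), len(pixels[0])
    cells = []
    for r in range(size):
        r0, r1 = r * height // size, (r + 1) * height // size
        for c in range(size):
            c0, c1 = c * width // size, (c + 1) * width // size
            block = [pixels[y][x] for y in range(r0, r1) for x in range(c0, c1)]
            cells.append(sum(block) / len(block))
    mean = sum(cells) / len(cells)
    bits = 0
    for value in cells:
        bits = (bits << 1) | int(value > mean)
    return bits


def hamming_distance(a: int, b: int) -> int:
    """Number of bit positions in which two hashes differ."""
    return bin(a ^ b).count("1")


def is_known(upload_hash: int, known_hashes: Iterable[int], max_distance: int = 5) -> bool:
    """True if the upload is within max_distance bits of any flagged hash."""
    return any(hamming_distance(upload_hash, k) <= max_distance for k in known_hashes)


# Hypothetical data: a 64x64 horizontal gradient stands in for flagged content.
original = [[x * 4 for x in range(64)] for _ in range(64)]
# A brightened copy, as might result from re-encoding or light editing.
brightened = [[min(255, p + 10) for p in row] for row in original]
# An unrelated image: a vertical gradient.
unrelated = [[y * 4 for _ in range(64)] for y in range(64)]

known_hashes = {average_hash(original)}  # database built by human moderators

brightened_is_blocked = is_known(average_hash(brightened), known_hashes)
unrelated_is_blocked = is_known(average_hash(unrelated), known_hashes)
```

In this sketch the brightened copy is matched and the unrelated image is not. A cryptographic hash such as SHA-256 would not work for this purpose, because changing a single pixel would produce a completely different digest. Production systems would also need to handle video and audio, and to resist deliberate evasion, which this toy example does not attempt.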

Use of these technologies is critical because a video can gather hundreds of views and spread onto smaller, encrypted platforms, where it will be nearly impossible to find, in a matter of hours. In the past few years, extremists have become increasingly active on encrypted platforms, a trend that poses a challenge to law enforcement agencies. The regulator should therefore mandate that end-to-end encrypted platforms either use specific encryption protocols (for example, partially or fully homomorphic encryption) that allow for the use of hashing technology to detect known extremist content, or deploy hashing technology at the point of transmitting a message. Either approach will allow companies to detect known harmful material while maintaining users’ privacy.

Most importantly, this legislation needs to be flexible enough to protect vulnerable people as technology changes, while at the same time maintaining free speech, privacy and freedom of expression. This balance is fundamental to the governance of a medium that has undeniably transformed the lives and fortunes of people across the planet who were previously denied access to the freedom and empowerment that unfettered information can bring. It is important to strike the right balance between safety and rights, but it is never simple. While social media regulation should be no different from offline norms, including prohibitions on inciting violence, hate crimes and acts of terrorism, the question of who interprets and regulates behaviour on transnational platforms remains fraught with difficulty and at risk of illiberal interference.

Clearly then, when considering the international nature of social media platforms and their reach, this should be a multi-national effort. It should also include not only legislation, but education – promoting inclusion, tolerance and the sort of critical thinking skills young people require to navigate the sometimes dangerous and seductive online world. Parliamentarians on all sides should work with social media companies to tackle the lethal threat posed by violent extremism – a challenge that will survive the upheaval of Brexit and beyond.

About the author: Sir Ivor Roberts is a former British diplomat and senior adviser at the Counter Extremism Project.

Development of specialized PCVE web site is funded by EU FUNDS CN 2017-386/831 - "IPA II 2016 Regional Action on P/CVE in the Western Balkans"
